In the past several years, the use of artificial intelligence and machine learning has advanced steadily across the board. In photography this has been the case for quite some time, given the amount of data your camera needs to process.

AI can help speed up that processing, and it has been doing so more and more effectively over time. Recently, the same technology has begun spreading to editing software and art, so let’s talk about that.

Machine Learning at your Fingertips

Let’s get technical for a short bit; this will give you the background you need for the rest.

It is fairly safe to regard tech giant Nvidia’s 2018 launch of its RTX 20 series graphics cards as the first leap into mass consumer access to “usable” machine learning-ready hardware, even though the technology had existed in various forms before then.

Most cards released before then lacked the processing capacity needed to run machine learning workloads efficiently… and that’s where Tensor cores came in.

In short, Tensor cores are specialized processing units that accelerate machine learning and AI workloads, and they are now featured in most Nvidia cards released today. To stay competitive, other graphics card manufacturers took notice and began developing their own versions of the same hardware.

Long story short, if you’ve bought a relatively well-specced computer in the last few years, chances are this technology is already built into your laptop or PC.

As it became feasible to own and experiment with this kind of technology without a supercomputer, various development teams began researching ways to apply it across the wider market, whether for profit or for science.

This was at least partly an intended outcome for Nvidia, which, as one of the few suppliers of such hardware, stood to gain the most from a rapidly advancing market. Since then, the adoption of these cards has helped various industries flourish, and photography has definitely been one of them.

As more computer users have this technology at hand, it has become viable for tech companies to offer software and services that take full advantage of it.

And thanks to cloud servers, even if your own machine lacks the raw processing power for advanced AI calculations, most of that work can be sent off to a large data center that has it.

Painting a picture

This kind of cloud-based setup is what made AI text-to-art generation so popular: anyone can try it on almost any device, and all it takes is a bit of your time. Give DALL-E Mini (since renamed Craiyon) a try; it’s one of the earliest and most lightweight generators, and it runs in your browser. We’ll wait for you here :)

There are now plenty of similar, more advanced generators, some producing results that look almost lifelike apart from a few inconsistencies (AI still often struggles with human faces, for example).

Still, we’ll likely cross that barrier too. It’s no wonder, then, that the software you may use to edit and cull your photos has also started adding AI to its toolkit.

Notably, there are now options such as text-prompted AI backdrops in Photoshop, or Luminar AI, a plugin that can completely change how some photos look with minimal effort. It is no coincidence that such options appeared in the same time frame as all of the other technological advancements we’ve covered.

Photography - a modern illusion?

So, what has all of this been leading up to? A question for you, the photographer, and one that only you can answer for yourself.

With all of this automation at hand (AI-assisted photo-taking, AI photo editing, and possibly soon AI design), is it still right to call your end product a photograph?

It’s easy to paint this as just an extension of the semantic quandary of the 2000s about Photoshop and whether a heavily edited photo is still a photo, but there is a key difference: back then, there was still a person on the other side of the screen doing all of that work, with a clear intent to build on the original file they provided.

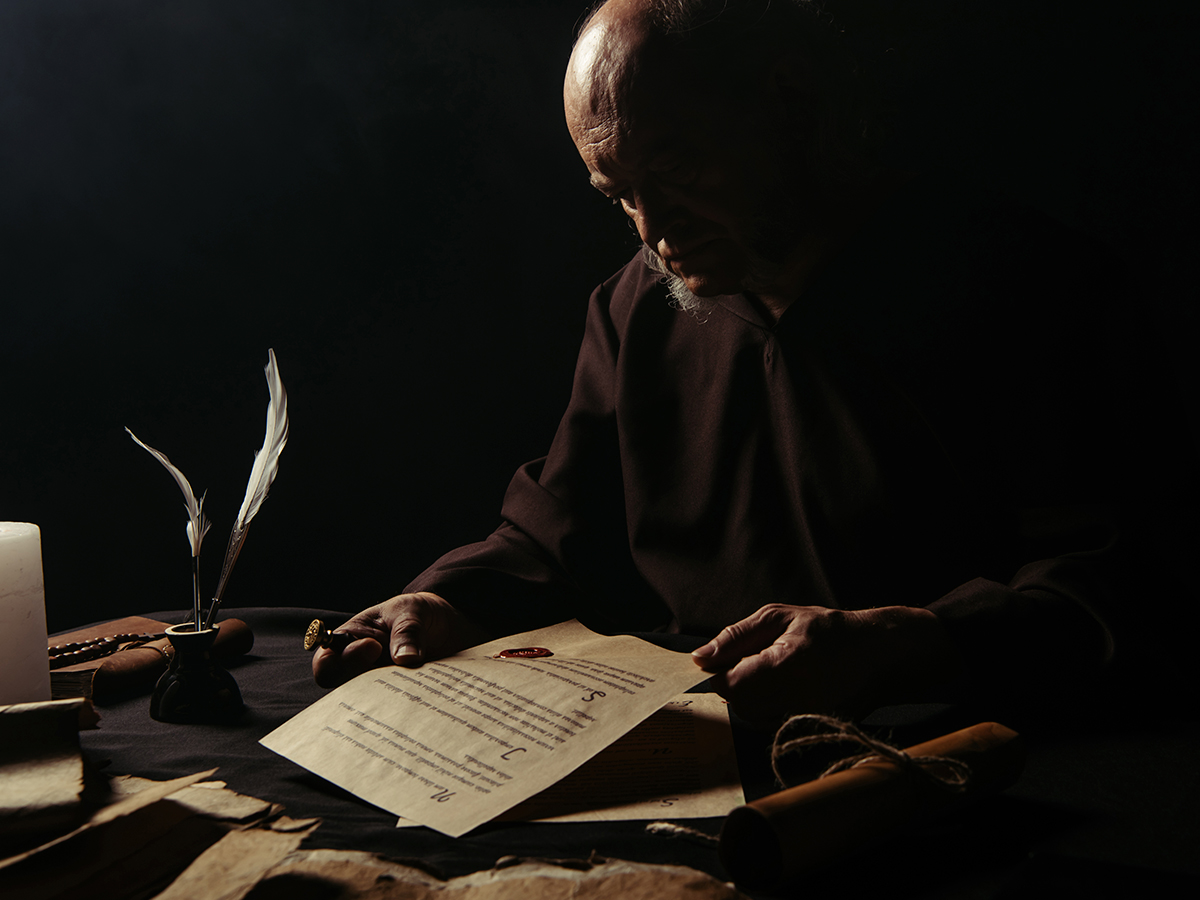

Will traditional editing methods come to be viewed as archaic and inefficient, the way hand-copied manuscripts were once the printing press arrived?

Since some of these AI-powered changes take only a few clicks to alter your image or even radically redesign it, the amount of human involvement in editing decisions is rapidly decreasing. It’s plausible that in the near future, most of the human decision-making in a typical editing session will come down to whether you accept the results a machine gives you, much as most photographers already do with high-tech cameras.

This isn’t to say that it’s no longer your photo. You still created the original source for whatever it becomes by the end of that hypothetical editing session. You did so with the help of an AI-powered camera, yes, but it was you who exerted your will on that camera nevertheless.

Some consider this kind of photography a sub-genre of digital art, but that is ultimately a subjective view. So, when it comes to automated photo editing, is a hardline stance required? Are some changes still acceptable if the result is to be classified as a photo? Or does anything that started as a photo still count as one?

How much the professional photography industry engages in this open debate will likely depend on whether businesses widely adopt the technology or it remains nothing more than a niche addition to your already impressive editing toolkit.